Data Challenges

Lore IO’s core offering revolves around transforming data and making analytics-ready data available to customers so they can solve their business use cases. Lore IO’s primary requirement for any new project is to centralize the customer’s data in a data warehouse. Because customers use multiple data sources (databases and SaaS applications) and different data warehousing systems, building integrations with such a variety of tools is a time-consuming and tedious process.

Here’s the standard operating procedure that Lore IO follows while onboarding a new customer:

- Map out the customer’s data sources and destinations

- Address security concerns

- Develop an ingestion pipeline

- Carry out the required data transformations

The third step proved to be the most time-consuming part of onboarding: developing an ingestion pipeline for just one data source took at least a month.

For every new customer, we were building connectors internally by writing complex code, which was unreliable and consumed a lot of engineering bandwidth. It took a platform engineer at least a month to develop a connector with a streaming pipeline, and much more time to maintain it. For a startup like ours with limited resources, this was a big problem, and it kept us from focusing on our core product offering.

The Solution

Before Hevo, Lore IO had been using custom Python scripts to integrate data. To extend support to more sources and destinations, they would have had to spend a significant portion of their resources developing an in-house solution, diverting attention from their core offering.

Building an in-house data pipelining solution would have taken a lot of engineering time for us and would not have provided any value, as this is not our area of focus. We tried a variety of approaches, from writing our own scripts to using Spark and other streaming data transfer products, but none of them were as simple and reliable as Hevo.

Before settling on Hevo, Maurin had very clear expectations of the data integration tool. It had to:

- Support as many sources and destinations as possible

- Be easily deployable without the involvement of a data engineer

- Scale to millions of events

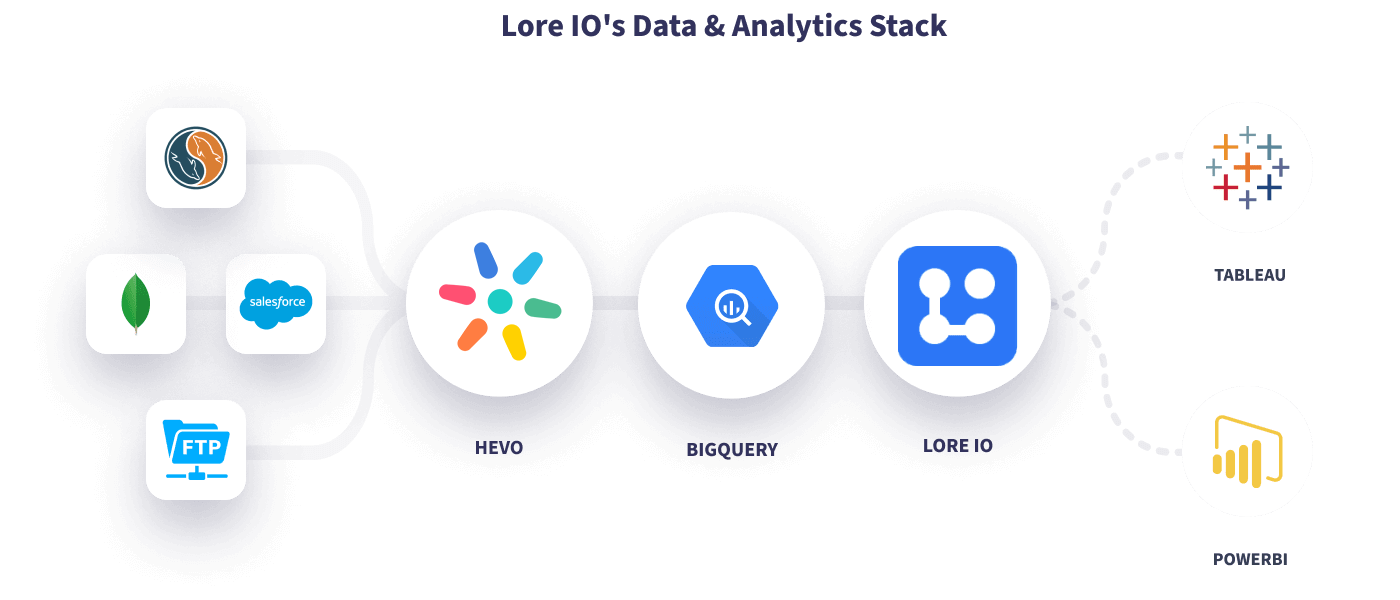

Using Hevo, Lore IO has built data pipelines that connect data sources such as Salesforce, MongoDB, and MySQL to its BigQuery warehouse. Once the data is centralized in BigQuery, it is transformed on Lore IO’s platform and then exported to BI tools such as Tableau or Power BI for analytics and reporting.

Key Results

Lore IO has seen a drastic improvement since switching to Hevo and automating its data integration process. Its platform engineers have saved months of engineering time, which they are now investing in building more features for the platform.

We have almost 20 active pipelines on Hevo processing 100,000+ records each day without any data issues. We always get 100% accurate data with no latency. As Hevo does all the heavy lifting, we can now onboard any customer in just a week. This is just amazing! Hevo is extremely easy to use and robust. It processes data faster than any other tool available in the market.

Lore IO is expanding its presence in the life sciences industry and recently announced the Lore IO Life Sciences Cloud Analytics Packages, which cover clinical operations, pre-commercial, medical affairs, and commercial operations. These analytics packages enable emerging biopharma organizations to improve data management, become more agile, and plan more successful product launches. Lore IO is also planning to expand its engineering vertical and is considering connecting to new data warehouse destinations. Hevo is proud to play a pivotal role in simplifying Lore IO’s customer onboarding process and looks forward to taking this growth to a new level with unified data.

Excited to see Hevo in action and understand how a modern data stack can help your business grow? Sign up for our 14-day free trial or register for a personalized demo with our product expert.